You read an article that backs your opinion. You nod along, share it, and feel vindicated. Another article says the opposite — you scoff, roll your eyes, question the source. Same topic. Same data. Different framing. Different reaction.

It feels like discernment. It’s often just confirmation bias.

We don’t just look for truth. We look for what fits.

What is confirmation bias?

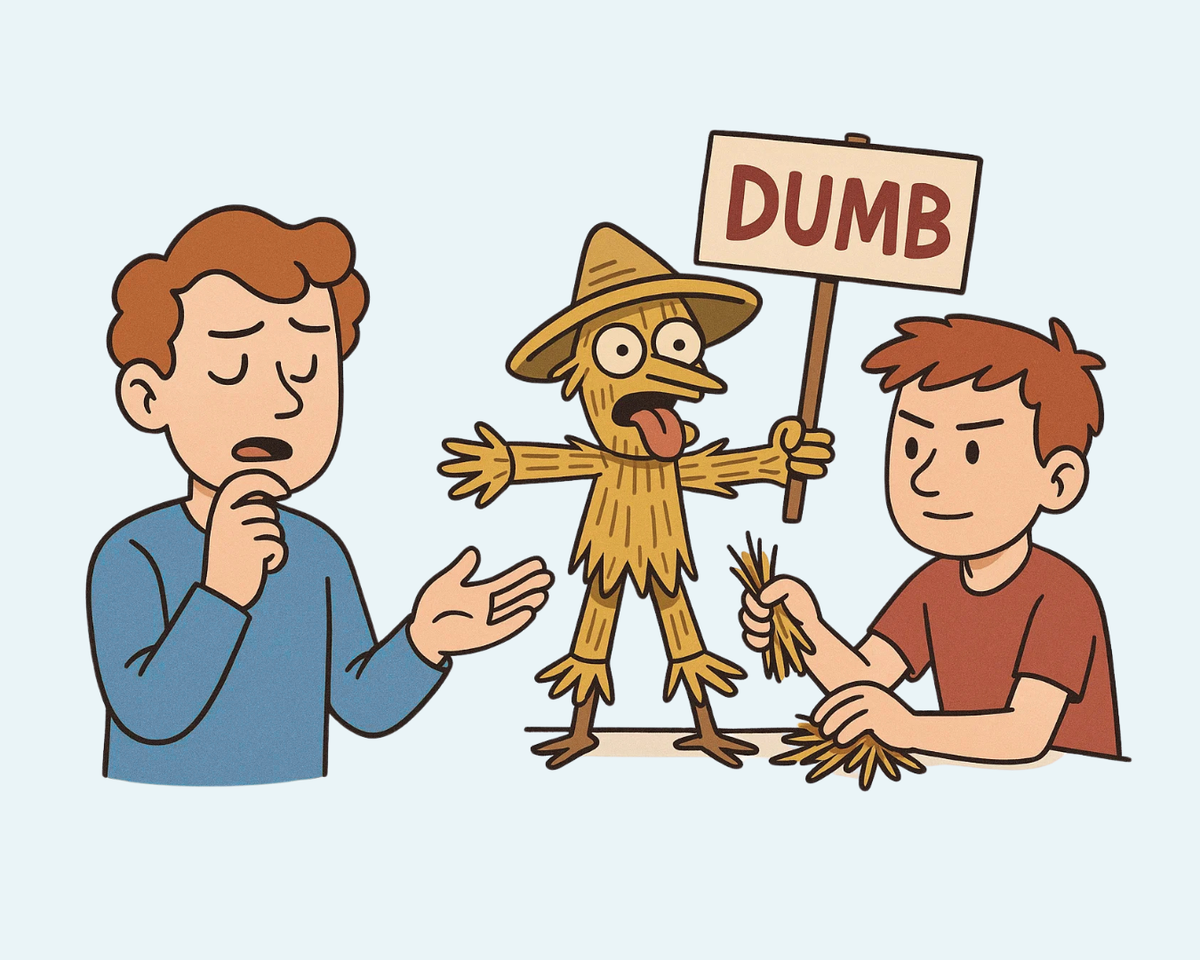

Confirmation bias is our tendency to seek out, interpret, and remember information in ways that confirm what we already believe — and ignore or dismiss anything that challenges it.

It’s not a flaw in a few people. It’s a feature of the human mind.

We all do it. We follow voices that agree with us. We filter facts through our existing worldview. And once we believe something, we become very good at defending it — and very bad at seeing around it.

Why does it have such a hold on us?

Belief feels like clarity. Doubt feels like danger. So we cling to the things that reassure us — headlines, hashtags, hot takes. Our brains reward coherence, not contradiction. It’s more comfortable to double down than to reconsider.

This is why people can read the same news story and walk away with opposite conclusions. It’s why conspiracy theories flourish in echo chambers. It’s why even smart, educated people fall into intellectual ruts.

Confirmation bias is especially dangerous in the age of algorithmically curated feeds. Our feeds are tuned to show us what we want to see, not what's most accurate. The more you click, the narrower the feed becomes. And before long, it feels like everyone agrees with you. Because everyone you see does.

This isn't just a quirk of individual psychology. It's a threat to collective reasoning.

The antidote to confirmation bias

The cure isn’t to stop having opinions. It’s to hold them with humility.

Be curious about being wrong. Seek out disagreement that’s thoughtful, not performative. Read things you reflexively disagree with — not to argue, but to understand. And when you encounter something uncomfortable, ask: what if this is true?

Truth rarely arrives pre-packaged. It’s uncovered, tested, refined. Which means it can’t grow in a closed loop.

Thinking well means checking your own assumptions — not just cheering the ones you already hold.

Quick summary

Confirmation bias is our tendency to notice, believe, and remember information that confirms our existing views, while ignoring what challenges them. It’s natural, but dangerous. Clear thinking means questioning not just others, but ourselves.

FAQ: Confirmation bias

What is confirmation bias?

It’s the tendency to favour information that supports what we already believe, and to ignore or undervalue information that contradicts those beliefs.

Is it always bad?

Not always — it’s a mental shortcut that helps us make sense of the world. But unchecked, it leads to closed thinking, polarisation, and poor decision-making.

What are some examples?

- Only reading news from sources that agree with your politics

- Ignoring criticism of your favourite public figure

- Dismissing evidence in a debate because it “must be biased”

- Remembering the times your lucky socks “worked,” forgetting the times they didn’t

How can I avoid it?

- Actively seek out smart disagreement

- Ask: what would change my mind?

- Practise intellectual humility, and don't confuse certainty with clarity

Is confirmation bias the same as critical thinking?

No. In fact, it’s the opposite. Critical thinking requires examining all the evidence, not just the parts that feel good.

Further reading

Thinking, Fast and Slow

by Daniel Kahneman

A foundational look at the two systems of thought that shape our judgments — and how biases like confirmation bias creep into even our smartest decisions.

The Scout Mindset: Why Some People See Things Clearly and Others Don’t

by Julia Galef

A refreshing, practical guide to replacing motivated reasoning with intellectual honesty. Essential reading for anyone trying to see more clearly — even when it’s uncomfortable.

The Believing Brain

by Michael Shermer

Explores how beliefs are formed — and then reinforced through confirmation bias. A sharp blend of neuroscience, psychology, and real-world examples.

Being Logical: A Guide to Good Thinking

by D.Q. McInerny

A concise, elegant introduction to sound reasoning — ideal for spotting (and avoiding) your own thinking errors.

Mistakes Were Made (But Not by Me)

by Carol Tavris and Elliot Aronson

An engaging, psychologically grounded look at self-justification, denial, and how confirmation bias helps us defend even our worst choices.

